Check out our latest paper: explaining human behavior with deep nets and oracle models

We perceive and recognize objects with ease; however, the computational task performed by the brain is far from trivial. Until recently, computational models of object vision did not come close to human object-recognition performance. This changed with the advent of deep convolutional neural networks. Deep neural nets are loosely inspired by the human brain and consist of units (“neurons”) organized in multiple layers (“brain regions”) and connected by weights that can be modified by training (“synapses”).

Despite the impressive performance of deep neural nets at object recognition, it is unclear whether they are good models of human perception and cognition. How well do deep neural nets capture human behavior on more complex cognitive tasks? And how does their performance compare to that of non-computational conceptual (“oracle”) models?

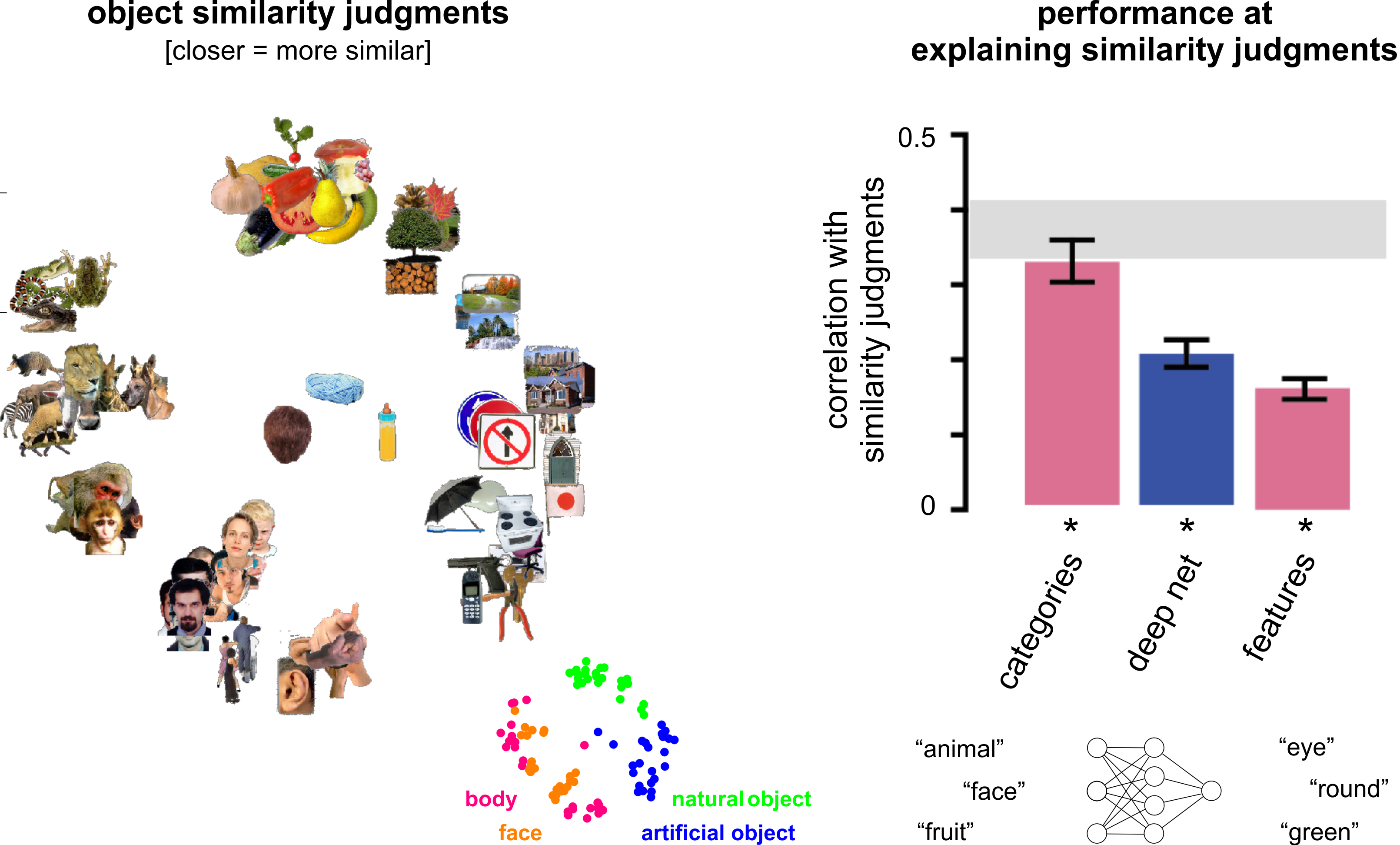

We address these questions using a well-established cognitive task – judging object similarity. Human observers performed similarity judgments for a set of 92 object images from a wide range of categories, including animate (animals, humans) and inanimate (fruits, tools) objects. We tested how well the judgments could be explained by internal representations of deep neural nets, as well as by feature labels (e.g. “eye”) and category labels (e.g. “animal”) generated by human observers (who serve as an “oracle” here).
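To make the comparison concrete, here is a minimal sketch of one common way to relate a model's internal representation to human similarity judgments: build a pairwise dissimilarity matrix from the model's features and rank-correlate it with the human dissimilarities. This is an illustration under stated assumptions, not necessarily the analysis used in the paper. The function names, the 1 − correlation distance, the feature dimensionality, and all data below are hypothetical placeholders.

```python
import numpy as np

def rdm_from_features(features):
    """Pairwise dissimilarity (1 - Pearson correlation) between the rows
    of an (n_objects, n_features) array. The distance choice is an assumption."""
    return 1.0 - np.corrcoef(features)

def upper_triangle(rdm):
    """Return the off-diagonal upper-triangle entries as a 1-D vector."""
    i, j = np.triu_indices(rdm.shape[0], k=1)
    return rdm[i, j]

def average_ranks(values):
    """Rank a 1-D array, assigning tied values their average rank (1-based)."""
    _, inverse, counts = np.unique(values, return_inverse=True, return_counts=True)
    ends = np.cumsum(counts)        # rank of the last member of each tie group
    starts = ends - counts + 1      # rank of the first member of each tie group
    return ((starts + ends) / 2.0)[inverse]

def rdm_agreement(model_rdm, human_rdm):
    """Spearman rank correlation between two dissimilarity matrices,
    computed over their upper triangles."""
    a = average_ranks(upper_triangle(model_rdm))
    b = average_ranks(upper_triangle(human_rdm))
    return np.corrcoef(a, b)[0, 1]

# Synthetic placeholder data only; none of this comes from the study.
rng = np.random.default_rng(0)
n_objects = 92                                      # matches the 92 images in the text
layer_features = rng.normal(size=(n_objects, 50))   # stand-in for deep-net activations
is_animate = rng.integers(0, 2, size=n_objects)     # stand-in for a category label

# A category "oracle" model: objects are dissimilar if their labels differ.
category_rdm = (is_animate[:, None] != is_animate[None, :]).astype(float)
deep_net_rdm = rdm_from_features(layer_features)

# Stand-in for human judgments: category structure plus symmetric noise.
noise = rng.normal(scale=0.5, size=(n_objects, n_objects))
human_rdm = category_rdm + (noise + noise.T) / 2.0

print("deep net vs. human:", rdm_agreement(deep_net_rdm, human_rdm))
print("categories vs. human:", rdm_agreement(category_rdm, human_rdm))
```

Because the synthetic "human" matrix is built from the category labels, the printed values say nothing about the real results; the sketch only shows how a deep-net representation and an oracle label model can be scored against the same judgments.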

We show that deep neural nets, despite not being trained to judge object similarity, can explain a significant amount of variance in the human object-similarity judgments. The deep nets outperform the oracle features in explaining the similarity judgments, suggesting that they better capture the object properties that give rise to similarity judgments than feature labels do. However, the deep nets are outperformed by the oracle categories, suggesting that they fail to fully capture the higher-level, more abstract categories that are most relevant to humans. By comparing object representations between deep nets and simpler oracle models we gain insight into aspects of deep nets that contribute to their explanatory power, and into those that are missing and need to be improved.